Following up on last week’s release of the Leap Motion Interaction Engine, I’m excited to share Weightless: Remastered, a major update to my project that won second place in the first-ever 3D Jam. A lot has changed since then! In this post, we’ll take a deeper look at the incredible power and versatility of the Interaction Engine, including some of the fundamental problems it’s built to address. Plus, some tips around visual feedback for weightless locomotion, and designing virtual objects that look “grabbable.”

When I made the original Weightless, there wasn’t a stellar system for grasping virtual objects with your bare hands yet. Binary pinching or closing your hand into a fist to grab didn’t seem as satisfying as gently fingertip-tapping objects around. It wasn’t really possible to do both, only one or the other.

The Interaction Engine bridges that gap – letting you boop objects around with any part of your hand, while also allowing you to grab and hold onto floating artifacts without sacrificing fidelity in either. You can now actually grab the floating Oculus DK2 and try to put it on!

Interaction Engine Essentials

So what does the Interaction Engine actually do? At the most basic level, it makes it easy to design grab and throw interactions. But while the Interaction Engine can tell when an object is being grabbed, that’s only a tiny part of what it can do.

That’s because basic grabbing is pretty easy. You could probably write code in 15 minutes that manages to parent an object to the hand when the fingers are curled in a certain way. This binary solution has been around for a long time, but it completely falls apart when you add another hand and more objects.
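
To make the limitation concrete, here is a minimal sketch of that 15-minute binary grab, written in Python for brevity rather than Unity C#. The `Hand` class, the `finger_curl` value, and the `GRAB_CURL` threshold are all hypothetical names for illustration, not part of any Leap Motion API.

```python
from dataclasses import dataclass
from typing import Optional


@dataclass
class Hand:
    name: str
    finger_curl: float  # hypothetical: 0.0 (open hand) to 1.0 (closed fist)
    holding: Optional[str] = None


GRAB_CURL = 0.7  # assumed threshold, chosen only for this example


def update_grab(hand: Hand, nearest_object: Optional[str]) -> None:
    """Binary grab: attach the nearest object once the hand is curled enough."""
    if hand.finger_curl >= GRAB_CURL:
        if hand.holding is None and nearest_object is not None:
            hand.holding = nearest_object  # "parent" the object to this hand
    else:
        hand.holding = None  # hand opened, so drop whatever was held


left = Hand("left", finger_curl=0.9)
right = Hand("right", finger_curl=0.9)
update_grab(left, "cube")
update_grab(right, "cube")
# Both hands now claim the same cube and nothing here decides which one wins.
```

With one hand and one object this works fine. As soon as the second hand closes near the same cube, the two hands fight over it, which is exactly the kind of conflict described next.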

What happens when you grab an object with one hand, while also pushing it with the other? What happens when you push a stack of objects into the floor? Push one object with another object? Grab a complex object? Grab one object from a pile? All of these can cause massive physics conflicts.

Suddenly you discover that hand and object mechanics can be incredibly difficult problems at higher levels of complexity. The problem has gone from two dimensions – one hand plus one object – to five or more.

The Interaction Engine is a fundamental step forward because it takes in the dynamic context of the objects your hands are near. This lets you grab objects of a variety of shapes and textures, as well as multiple objects near each other that would otherwise be ambiguous. As a result, the Interaction Engine makes things possible that you can’t find in almost any other VR demo with any other kind of controller. It’s also designed to be extensible, with interaction materials that can easily be customized for any object.

We’ll come back to the Interaction Engine later when we look at designing the brand-new Weightless Training Room. But first, here are some of the other updates to the core experience.

Locomotion UI using Detectors

Locomotion is one of the biggest challenges in VR – because anything other than walking is already unintuitive. Fortunately, in a weightless environment, you can take a few liberties. The locomotion method in Weightless involves the user putting both hands up, palms facing forward with fingers extended and pointed up, then gently pushing forward to float in the direction they’re facing.

Interactions like this are very difficult to communicate verbally. In the original, there was no UI in place for giving the user feedback on what they needed to do to begin moving.

Now with our Unity Detectors Scripts, giving users feedback on their current hand pose is extremely simple. You can set up a detector to check if the palms are facing forward (including setting a range around the target direction within which the detector is enabled or disabled) and have events fire whenever the conditions are met.
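
As a rough illustration of the idea (in Python, not the actual Unity scripts), a direction detector with separate on and off angles might look like the sketch below. The class name, the `on_angle` and `off_angle` parameters, and the callback names are assumptions made for this example. The gap between the two angles keeps the detector from flickering when the hand hovers near the threshold.

```python
import math
from typing import Callable, Optional, Sequence

Vector = Sequence[float]


def angle_between(a: Vector, b: Vector) -> float:
    """Angle in degrees between two 3D vectors."""
    dot = sum(x * y for x, y in zip(a, b))
    norm = math.sqrt(sum(x * x for x in a)) * math.sqrt(sum(y * y for y in b))
    if norm == 0.0:
        return 180.0  # treat a degenerate vector as "not aligned"
    return math.degrees(math.acos(max(-1.0, min(1.0, dot / norm))))


class DirectionDetector:
    """Turns on within on_angle of the target, off again beyond off_angle."""

    def __init__(
        self,
        target: Vector,
        on_angle: float = 30.0,   # assumed defaults, in degrees
        off_angle: float = 45.0,
        on_activate: Optional[Callable[[], None]] = None,
        on_deactivate: Optional[Callable[[], None]] = None,
    ) -> None:
        self.target = target
        self.on_angle = on_angle
        self.off_angle = off_angle
        self.on_activate = on_activate
        self.on_deactivate = on_deactivate
        self.active = False

    def update(self, direction: Vector) -> bool:
        """Feed the current palm direction; fires a callback on state change."""
        angle = angle_between(direction, self.target)
        if not self.active and angle <= self.on_angle:
            self.active = True
            if self.on_activate:
                self.on_activate()
        elif self.active and angle > self.off_angle:
            self.active = False
            if self.on_deactivate:
                self.on_deactivate()
        return self.active


events = []
forward = DirectionDetector(
    target=(0.0, 0.0, 1.0),
    on_activate=lambda: events.append("green"),
    on_deactivate=lambda: events.append("red"),
)
forward.update((0.0, 0.0, 1.0))  # palm aligned with the target: activates
forward.update((0.0, 0.7, 1.0))  # about 35 degrees off: stays active
forward.update((0.0, 1.0, 0.0))  # 90 degrees off: deactivates
```

Each callback only fires on a state change, so it can drive UI directly without extra bookkeeping.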

I hooked up Detectors to visual UI on the back of your gloves. These go from red to green when the conditions for EXTEND (fingers extended), UPRIGHT (fingers pointed up) and FORWARD (palms facing forward) are met. Green means go!

Pinch to Create Black Holes

In the original Weightless, you gained a “gravity ability” which let you attract floating objects to your hands with the press of a wrist-mounted button. This was fun, but often the swirling storm of objects would clutter your view, making it hard to see.

Now you can pinch with both your hands close together to create a small black hole which has the same effect. Similar to the block creation interaction in Blocks, you can resize the black hole by stretching it out before releasing the pinch.

The Training Room

To truly explore the potential of the Interaction Engine, I wanted to design a new section built around its unique strengths. Demoing Blocks to hundreds of people, I found that many would turn gravity off and then try to hit floating objects with other objects, to the point that some wouldn’t take the headset off until they succeeded! This inspired me to design an experience around grabbing and throwing weightless objects at targets.

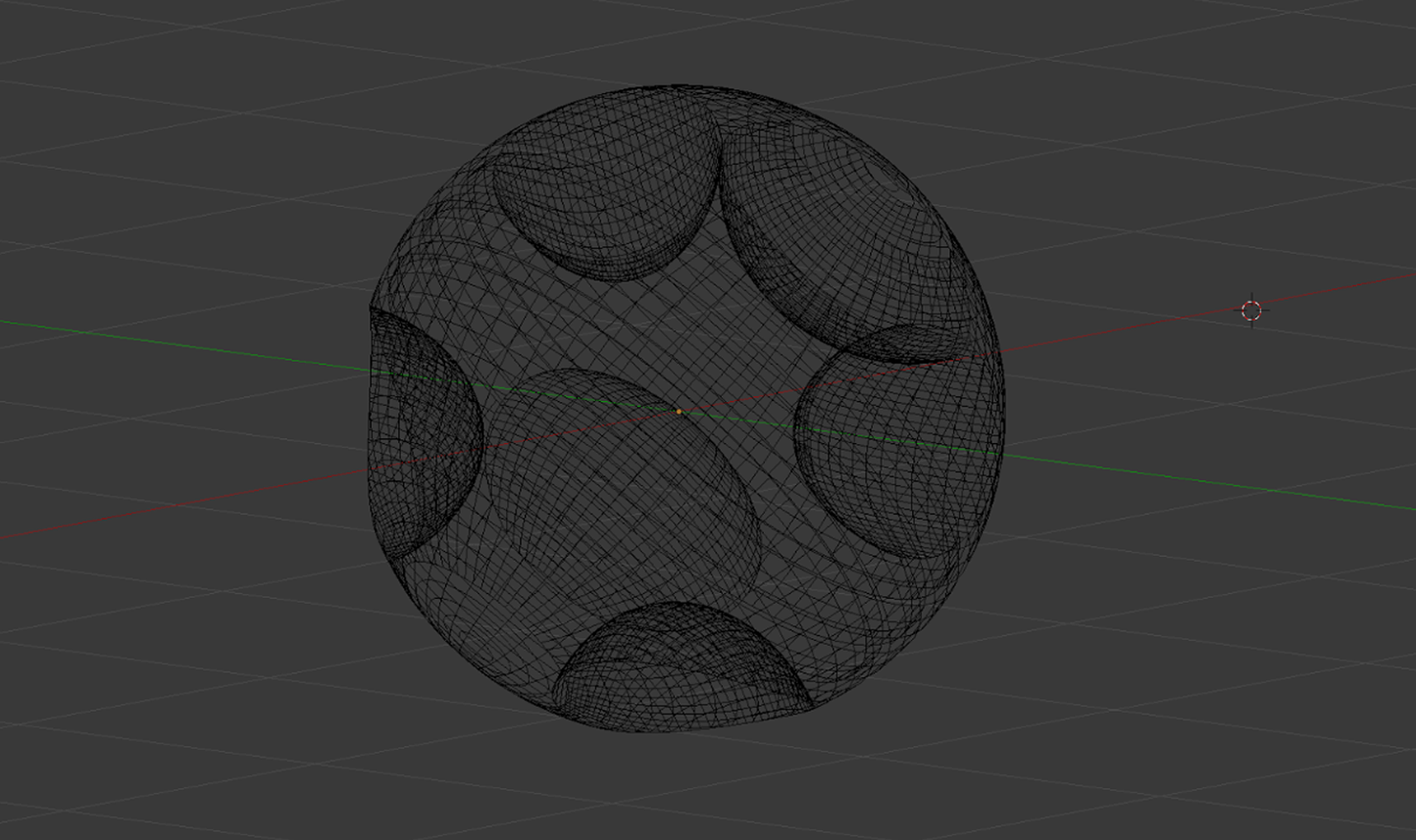

Grabbing and (Virtual) Industrial Design

While developing the Interaction Engine, it became clear that everybody grabs objects differently, and this is especially true in VR. Some people are very gentle, barely touching the perimeter of an object, while others will quickly close their whole hand into a fist inside the object. The Interaction Engine has to handle both situations consistently.

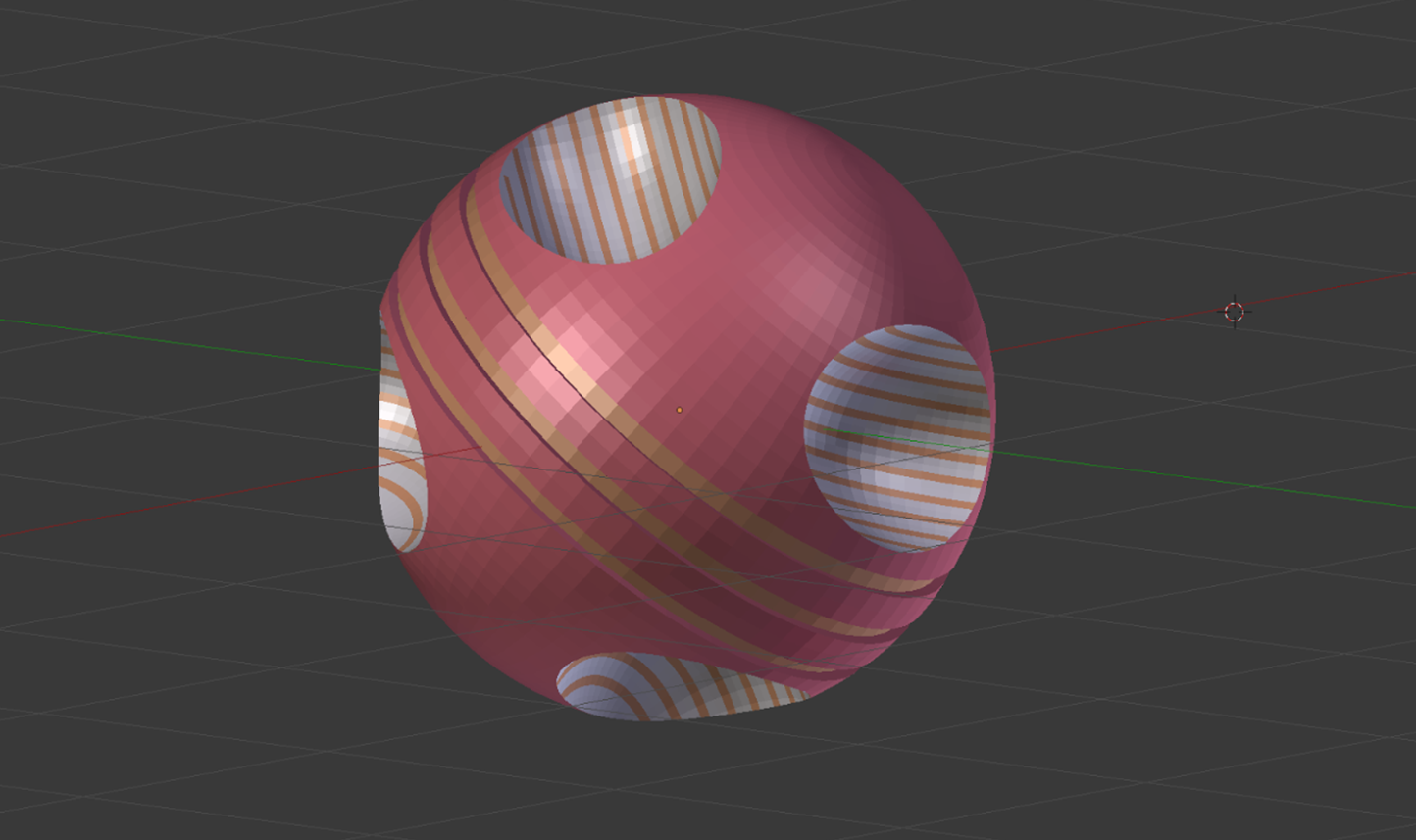

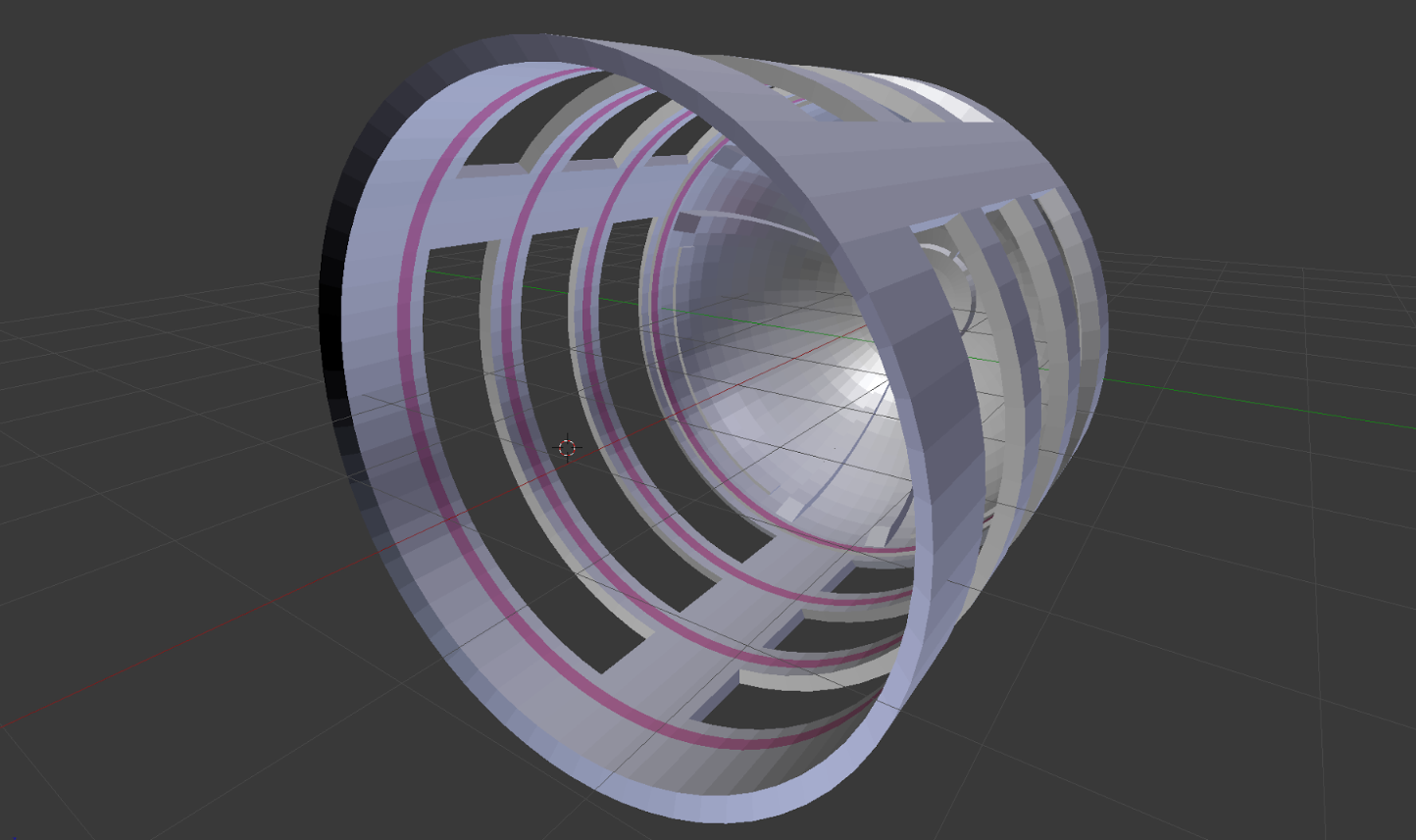

One area I wanted to explore was how the shape of an object could telegraph to the user how it should be held. Luckily, industrial designers have been doing this for a long time, using the term ‘affordance’ for the possible actions designed into the physical appearance of objects. Just like the shape of a doorknob suggests that you should grab it, virtual objects can give essential interaction cues just by their form alone.

For the projectiles you grab and throw in the Training Room, I first tried uniformly colored simple shapes – a sphere, a cube, a disc – and watched how people approached grabbing them. Without any affordances, users had a hard time identifying how to hold the objects. Once held, many users closed their hands into fists. This makes throwing more difficult as the object could become embedded into your hand’s colliders after you release it.

Taking cues from bowling balls and baseball pitchers’ grips, I added some indentations to the shapes and highlighted them with accent colors.

This led to a much higher rate of users grabbing by placing their fingers in the indents and made it much easier to successfully release the projectiles.

The Interaction Engine’s Sliding Window

Within the Leap Motion Interaction Engine, developers can assign Interaction Materials to objects. Each material contains ‘controllers,’ and each controller affects how the object behaves under certain conditions. The Throwing Controller decides what should happen when an object is released – more specifically, in what ways it should move.

There are two built-in throwing controllers, the PalmVelocity controller and the SlidingWindow controller. The PalmVelocity controller uses the velocity of the palm to decide how the object should move, to prevent the fingers from imparting strange and incorrect velocities to the object. The SlidingWindow controller takes the average velocity over a customizable window before the release occurs.

In the Training Room, I used the SlidingWindow controller and set the window length to 0.1 seconds. This seems to work well in filtering out sudden changes in velocity if a user stops their hand slightly before actually releasing the object.
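
The sliding-window idea can be sketched in a few lines. This is an illustrative Python version under my own assumptions, not the Interaction Engine's implementation: the class and method names are hypothetical, and it uses a plain unweighted average of the velocity samples that fall inside the window.

```python
from collections import deque
from typing import Deque, Tuple

Vec3 = Tuple[float, float, float]


class SlidingWindowThrow:
    """Averages recent velocity samples to pick a release velocity."""

    def __init__(self, window_seconds: float = 0.1) -> None:
        self.window_seconds = window_seconds
        self._samples: Deque[Tuple[float, Vec3]] = deque()

    def record(self, time: float, velocity: Vec3) -> None:
        """Store this frame's velocity and drop samples older than the window."""
        self._samples.append((time, velocity))
        while self._samples and time - self._samples[0][0] > self.window_seconds:
            self._samples.popleft()

    def release_velocity(self) -> Vec3:
        """Mean velocity over the samples still inside the window."""
        if not self._samples:
            return (0.0, 0.0, 0.0)
        n = len(self._samples)
        sx = sum(v[0] for _, v in self._samples)
        sy = sum(v[1] for _, v in self._samples)
        sz = sum(v[2] for _, v in self._samples)
        return (sx / n, sy / n, sz / n)


# The hand moves forward at 2 m/s, then stops on the very last frame
# just before the object is released.
throw = SlidingWindowThrow(window_seconds=0.1)
for t in (0.00, 0.03, 0.06, 0.09):
    throw.record(t, (0.0, 0.0, 2.0))
throw.record(0.12, (0.0, 0.0, 0.0))
release = throw.release_velocity()
```

Here the final zero-velocity frame only pulls the forward speed down to 1.5 m/s, the average of the four samples left in the window. Using the last frame alone would have dropped the projectile dead in the air.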

Designing the Room

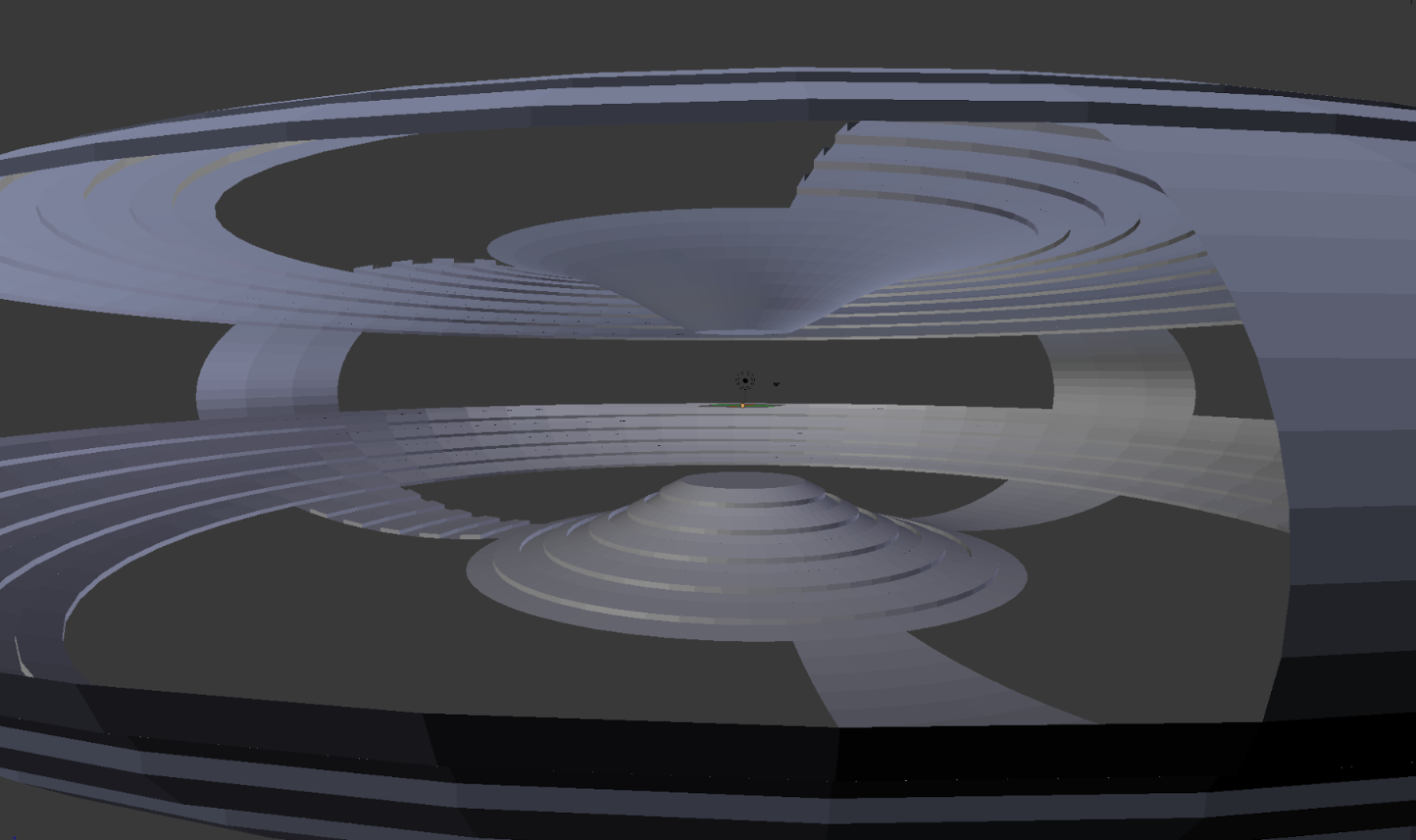

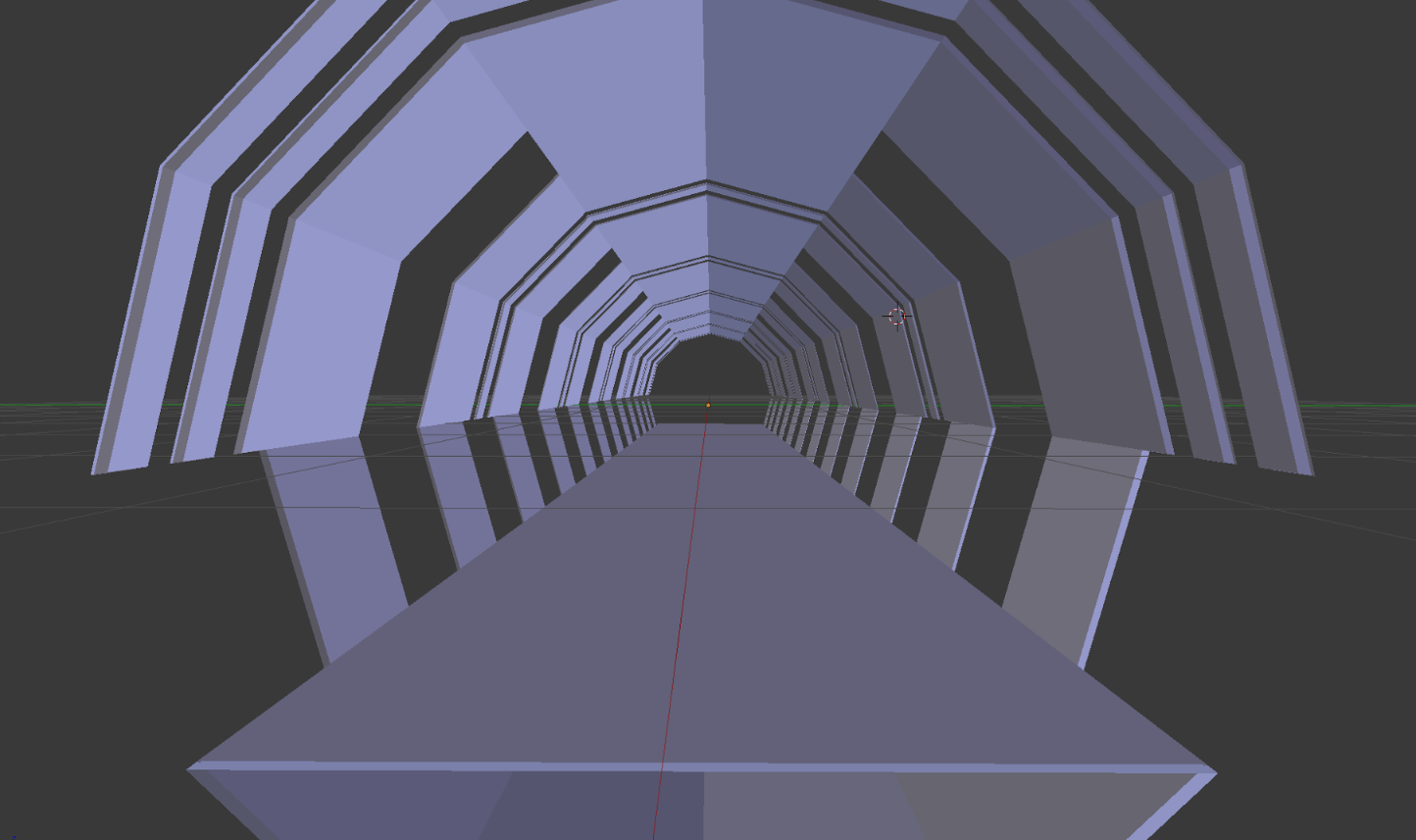

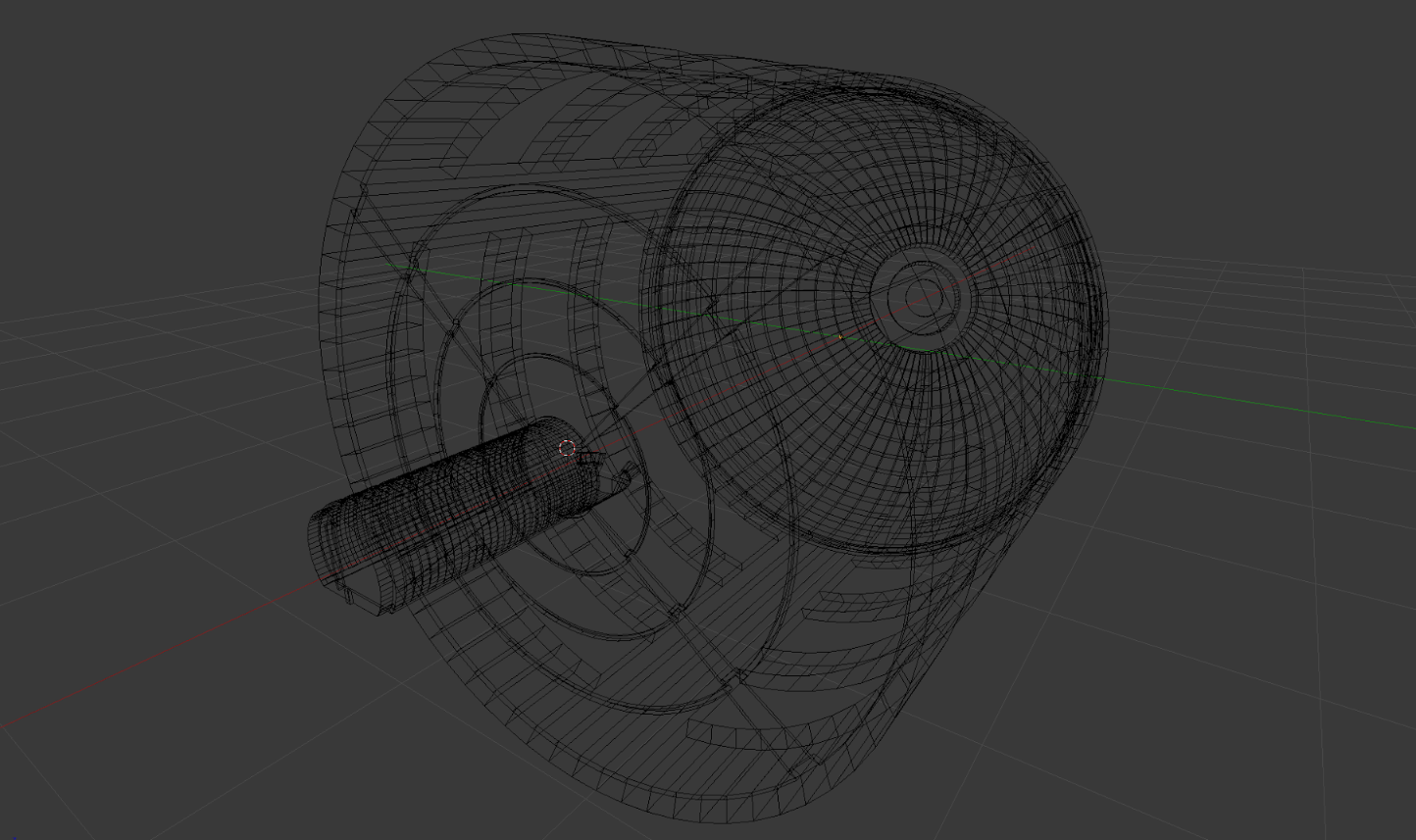

After homing in on the interaction of grabbing and then releasing a floating object toward a target, I began putting together some massing studies for environments I thought might be interesting to house the experience.

I tried some 360 environments, but I found I spent more time looking around trying to keep track of the targets than I did focusing on the interactions. Swinging in the other direction, I tried some linear tunnel-like spaces that focused my view forwards. While definitely more manageable, the smaller space for throwing meant I’d often unintentionally bounce projectiles off the walls. Whether or not I hit a target was more due to chance than intention.

I settled on a combination of the two extremes – a very wide cylinder giving the user 180° of open space with targets both above and below the platform they’re standing on.

Pinch and Drag Sci-Fi Interface

After a bunch of testing, I found that although my throwing had become pretty accurate, once the projectiles left my hand it was mainly a waiting game to see whether or not they would hit the target.

To turn this passive observation into an active interaction, I added the ability to pinch in the air to summon a spherical thruster UI. By dragging away from the original pinch point, the user can add directional thrust to the projectile in flight, curving and steering it towards the target. You can even make it loop back and catch it in your hand!

Since you’re essentially remote-controlling a small (and sometimes faraway) object, I tried to add as many cues as I could to help convey the strength and direction of thrust:

- A line renderer connecting the sphere center to the current pinch point, which changes color as the thrust becomes stronger

- Concentric spheres which light up and produce audio feedback as the pinch drags through them

- The user’s glove also changes color to reflect the thrust strength

- The projectile itself lights up when thrusting

- The speed, density, and direction of the thruster particle system are determined by thrust strength and direction

On the menu behind the player there’s also a slider to adjust the thruster sensitivity. Setting it to max allows even small pinches to change the trajectory of the projectile greatly. The tradeoff is that it’s much more challenging to control.
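
For anyone curious how a pinch drag could map to thrust, here is a small Python sketch of one way to do it. It is an illustration only: the function names, the `max_drag` distance, and the linear mapping from drag length to strength are my assumptions for this example, not the code used in Weightless.

```python
import math
from typing import Tuple

Vec3 = Tuple[float, float, float]


def thrust_from_drag(
    pinch_origin: Vec3,
    pinch_current: Vec3,
    sensitivity: float = 1.0,  # stands in for the menu slider
    max_drag: float = 0.3,     # assumed drag distance (meters) for full thrust
) -> Tuple[Vec3, float]:
    """Return (unit direction, strength in 0..1) for a pinch drag."""
    dx = pinch_current[0] - pinch_origin[0]
    dy = pinch_current[1] - pinch_origin[1]
    dz = pinch_current[2] - pinch_origin[2]
    length = math.sqrt(dx * dx + dy * dy + dz * dz)
    if length == 0.0:
        return (0.0, 0.0, 0.0), 0.0  # no drag yet, so no thrust
    direction = (dx / length, dy / length, dz / length)
    strength = min(1.0, sensitivity * length / max_drag)
    return direction, strength


def apply_thrust(
    velocity: Vec3, direction: Vec3, strength: float, max_accel: float, dt: float
) -> Vec3:
    """Nudge the projectile's velocity along the thrust direction for one frame."""
    scale = strength * max_accel * dt
    return (
        velocity[0] + direction[0] * scale,
        velocity[1] + direction[1] * scale,
        velocity[2] + direction[2] * scale,
    )


# Dragging 15 cm to the right of the original pinch point.
direction, strength = thrust_from_drag((0.0, 0.0, 0.0), (0.15, 0.0, 0.0))
_, boosted = thrust_from_drag((0.0, 0.0, 0.0), (0.15, 0.0, 0.0), sensitivity=2.0)
new_velocity = apply_thrust((0.0, 0.0, 1.0), direction, strength, max_accel=4.0, dt=0.5)
```

In this sketch the same 15 cm drag gives half thrust at the default sensitivity and full thrust when the sensitivity is doubled, which mirrors the slider's tradeoff between responsiveness and control. The single `strength` value is also a convenient input for feedback cues like the line color and the glove color.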

I hope you enjoy Weightless and the Training Room as much as I enjoyed building them. Each of the 5 levels saves the best times locally, so let me know your fastest times! Adding Interaction Engine support to your own project is quick and easy – learn more and download the Module to get started.

The post Weightless Remastered: Building with the Interaction Engine appeared first on Leap Motion Blog.